Probability

Event:

A subset of the sample space associated with a random experiment is called an event or a case.

e.g. In tossing a coin, getting a head (or getting a tail) is an event.

Equally Likely Events:

The given events are said to be equally likely if none of them is expected to occur in preference to the others.

e.g. In throwing an unbiased die, all six faces are equally likely to come up.

Mutually Exclusive Events:

A set of events is said to be mutually exclusive if the happening of one excludes the happening of the others, i.e. if A and B are mutually exclusive, then A ∩ B = Φ.

e.g. In throwing a die, the 6 faces numbered 1 to 6 are mutually exclusive, since if any one of these faces comes up, the possibility of the others in the same trial is ruled out.

Exhaustive Events:

A set of events is said to be exhaustive if the performance of the experiment always results in the occurrence of at least one of them.

If E1, E2, ..., En are exhaustive events, then E1 ∪ E2 ∪ ... ∪ En = S.

e.g. In throwing two dice, the exhaustive number of cases is 6² = 36, since any of the numbers 1 to 6 on the first die can be associated with any of the 6 numbers on the other die.
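
The count of 36 cases can be checked by listing the sample space directly. The following is a minimal sketch; the variable names are illustrative and not part of the notes.

```python
from itertools import product

# Each outcome is an ordered pair (first die, second die).
faces = range(1, 7)
sample_space = list(product(faces, repeat=2))

print(len(sample_space))  # 36
```

Every face of the first die is paired with every face of the second, which is why the count is 6 × 6.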

Complement of an Event:

Let A be an event in a sample space S. The complement of A is the set of all sample points of S other than the sample points in A, and it is denoted by A′ or Ā,

i.e. A′ = {n : n ∈ S, n ∉ A}

Note:

(i) An operation which results in some well-defined outcomes is called an experiment.

(ii) An experiment in which the outcomes may not be the same even if the experiment is performed under identical conditions is called a random experiment.

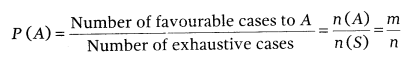

Probability of an Event

If a trial results in n exhaustive, mutually exclusive and equally likely cases, and m of them are favourable to the happening of an event A, then the probability of happening of A is given by

P(A) = m/n

Note:

(i) 0 ≤ P(A) ≤ 1

(ii) Probability of an impossible event is zero.

(iii) Probability of a certain (sure) event is 1.

(iv) P(A ∪ A′) = P(S) = 1

(v) P(A ∩ A′) = P(Φ) = 0

(vi) P((A′)′) = P(A)

(vii) P(A ∪ B) = P(A) + P(B) - P(A ∩ B)
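
As a quick check of the classical definition (favourable cases over total cases) and of the addition rule in note (vii), the sketch below uses one throw of a fair die. The events A and B and the helper `prob` are illustrative assumptions, not part of the notes.

```python
from fractions import Fraction

S = set(range(1, 7))   # sample space for one throw of a fair die
A = {2, 4, 6}          # hypothetical event: an even number
B = {4, 5, 6}          # hypothetical event: a number greater than 3

def prob(event, space=S):
    """Classical probability m/n for equally likely cases."""
    return Fraction(len(event & space), len(space))

complement_A = S - A

# Addition rule: P(A ∪ B) = P(A) + P(B) - P(A ∩ B)
lhs = prob(A | B)                          # 2/3
rhs = prob(A) + prob(B) - prob(A & B)      # 1/2 + 1/2 - 1/3 = 2/3
```

Using `Fraction` keeps the arithmetic exact, so the two sides can be compared with `==` rather than a floating-point tolerance.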

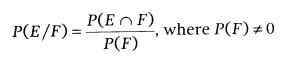

Conditional Probability:

Let E and F be two events associated with the same sample space of a random experiment. Then the probability of occurrence of event E, when the event F has already occurred, is called the conditional probability of event E over F and is denoted by P(E/F):

P(E/F) = P(E ∩ F) / P(F), P(F) ≠ 0

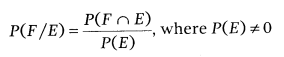

Similarly, the conditional probability of event F over E is given as

P(F/E) = P(E ∩ F) / P(E), P(E) ≠ 0

Properties of Conditional Probability:

If E and F are two events of sample space S and G is an event of S which has already occurred such that P(G) ≠ 0, then

(i) P[(E ∪ F)/G] = P(E/G) + P(F/G) - P[(E ∩ F)/G]

(ii) P[(E ∪ F)/G] = P(E/G) + P(F/G), if E and F are disjoint events.

(iii) P(E′/G) = 1 - P(E/G)

(iv) P(S/E) = P(E/E) = 1
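
A conditional probability can be computed by counting outcomes inside the conditioning event. The sketch below assumes two fair dice; the events E (sum is 8) and G (first die is even) and the helpers `prob` and `cond` are illustrative choices, not part of the notes.

```python
from fractions import Fraction
from itertools import product

S = set(product(range(1, 7), repeat=2))   # 36 equally likely outcomes

def prob(event):
    return Fraction(len(event), len(S))

def cond(event, given):
    """P(event/given) = P(event ∩ given) / P(given), for P(given) ≠ 0."""
    if prob(given) == 0:
        raise ValueError("conditioning event must have non-zero probability")
    return prob(event & given) / prob(given)

E = {s for s in S if s[0] + s[1] == 8}    # sum of the two dice is 8
G = {s for s in S if s[0] % 2 == 0}       # first die shows an even number

p_E_given_G = cond(E, G)                  # 3 of the 18 outcomes in G: 1/6
p_notE_given_G = cond(S - E, G)           # complement rule gives 5/6
```

The last two values illustrate the complement property: conditioning on G, the probabilities of E and of its complement add up to 1.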

Multiplication Theorem:

If E and F are two events associated with a sample space S, then the probability of simultaneous occurrence of the events E and F is

P(E ∩ F) = P(E) . P(F/E), where P(E) ≠ 0

or

P(E ∩ F) = P(F) . P(E/F), where P(F) ≠ 0

This result is known as the multiplication rule of probability.

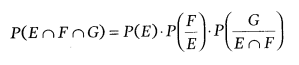

Multiplication Theorem for More than Two Events:

If E, F and G are three events of a sample space, then

P(E ∩ F ∩ G) = P(E) . P(F/E) . P(G/(E ∩ F))
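
A worked instance of the three-event rule, assuming a standard 52-card deck and three draws without replacement. The events (an ace on each draw) are a hypothetical example, not taken from the notes.

```python
from fractions import Fraction

# E: first card is an ace, F: second card is an ace, G: third card is an ace.
p_E = Fraction(4, 52)             # P(E): 4 aces among 52 cards
p_F_given_E = Fraction(3, 51)     # P(F/E): 3 aces left among 51 cards
p_G_given_EF = Fraction(2, 50)    # P(G/(E ∩ F)): 2 aces left among 50 cards

# Multiply the conditional probabilities along the chain of draws.
p_all_three = p_E * p_F_given_E * p_G_given_EF

print(p_all_three)  # 1/5525
```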

Independent Events:

Two events E and F are said to be independent if the probability of occurrence or non-occurrence of one of the events is not affected by that of the other. For any two independent events E and F, we have the relations

(i) P(E ∩ F) = P(E) . P(F)

(ii) P(E/F) = P(E), P(F) ≠ 0

(iii) P(F/E) = P(F), P(E) ≠ 0

Also, their complements are independent events,

i.e. P(E′ ∩ F′) = P(E′) . P(F′)

Note: If E and F are dependent events, then P(E ∩ F) ≠ P(E) . P(F).

Three events E, F and G are said to be mutually independent if

(i) P(E ∩ F) = P(E) . P(F)

(ii) P(F ∩ G) = P(F) . P(G)

(iii) P(E ∩ G) = P(E) . P(G)

(iv) P(E ∩ F ∩ G) = P(E) . P(F) . P(G)

If at least one of the above is not true for three given events, then we say that the events are not mutually independent.
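
Condition (iv) does not follow from (i) to (iii). The sketch below shows this with two tosses of a fair coin; the particular events E, F and G are a hypothetical example chosen to be pairwise independent but not mutually independent.

```python
from fractions import Fraction
from itertools import product

S = set(product("HT", repeat=2))          # HH, HT, TH, TT

def prob(event):
    return Fraction(len(event), len(S))

E = {s for s in S if s[0] == "H"}         # first toss is a head
F = {s for s in S if s[1] == "H"}         # second toss is a head
G = {s for s in S if s[0] == s[1]}        # both tosses show the same face

# Conditions (i) to (iii): every pair multiplies.
pairwise = (
    prob(E & F) == prob(E) * prob(F)
    and prob(F & G) == prob(F) * prob(G)
    and prob(E & G) == prob(E) * prob(G)
)

# Condition (iv): the triple intersection must multiply as well.
triple = prob(E & F & G) == prob(E) * prob(F) * prob(G)

mutually_independent = pairwise and triple
print(pairwise, triple)  # True False
```

Here P(E ∩ F ∩ G) = 1/4 while P(E) . P(F) . P(G) = 1/8, so all four conditions have to be checked.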

Note: Independent and mutually exclusive events do not have the same meaning.

Bayes' Theorem and Probability Distributions

Partition of Sample Space:

A set of events E1, E2, ..., En is said to represent a partition of the sample space S if it satisfies the following conditions:

(i) Ei ∩ Ej = Φ; i ≠ j; i, j = 1, 2, ..., n

(ii) E1 ∪ E2 ∪ ... ∪ En = S

(iii) P(Ei) > 0, ∀ i = 1, 2, ..., n

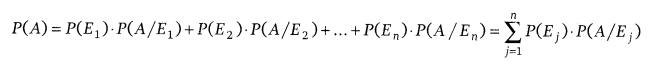

Theorem of Total Probability:

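Let events E1, E2, ..., En form a partition of the sample space S of an experiment. If A is any event associated with sample space S, then

P(A) = P(E1) . P(A/E1) + P(E2) . P(A/E2) + ... + P(En) . P(A/En) = Σ P(Ej) . P(A/Ej), j = 1, 2, ..., n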

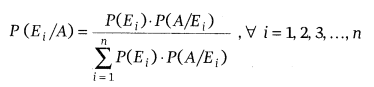

Bayes' Theorem:

If E1, E2, ..., En are n non-empty events which constitute a partition of sample space S, i.e. E1, E2, ..., En are pairwise disjoint, E1 ∪ E2 ∪ ... ∪ En = S and P(Ei) > 0 for all i = 1, 2, ..., n, and if A is any event of non-zero probability, then

P(Ei/A) = P(Ei) . P(A/Ei) / Σ P(Ej) . P(A/Ej), where the sum runs over j = 1, 2, ..., n
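
The theorem of total probability and Bayes' theorem can be sketched together. The example assumes two bags chosen with equal probability, where bag I gives a red ball with probability 3/7 and bag II with probability 5/11. These numbers and the function names are hypothetical, chosen only for illustration.

```python
from fractions import Fraction

# Priors P(Ei) for the partition {E1, E2} (which bag is chosen).
priors = {"E1": Fraction(1, 2), "E2": Fraction(1, 2)}
# Likelihoods P(A/Ei), where A is "a red ball is drawn".
likelihoods = {"E1": Fraction(3, 7), "E2": Fraction(5, 11)}

def total_probability(priors, likelihoods):
    """P(A) = sum over j of P(Ej) . P(A/Ej)."""
    return sum(priors[e] * likelihoods[e] for e in priors)

def bayes(priors, likelihoods):
    """P(Ei/A) = P(Ei) . P(A/Ei) / P(A) for every Ei in the partition."""
    p_a = total_probability(priors, likelihoods)
    return {e: priors[e] * likelihoods[e] / p_a for e in priors}

p_A = total_probability(priors, likelihoods)   # 34/77
posterior = bayes(priors, likelihoods)         # E1: 33/68, E2: 35/68
```

The posterior probabilities sum to 1, as they must for a partition of the sample space.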

Random Variable:

A random variable is a real-valued function whose domain is the sample space of a random experiment. Generally, it is denoted by a capital letter such as X.

Note: More than one random variable can be defined on the same sample space.

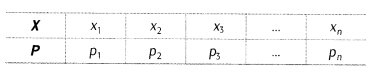

Probability Distributions:

The system in which the values of a random variable are given along with their corresponding probabilities is called a probability distribution.

Let X be a random variable which can take n values x1, x2, ..., xn. Let p1, p2, ..., pn be the respective probabilities. Then, a probability distribution table is given as follows:

X    : x1  x2  x3  ...  xn
P(X) : p1  p2  p3  ...  pn

such that p1 + p2 + p3 + ... + pn = 1

Note: If xi is one of the possible values of a random variable X, then the statement X = xi is true only at some point(s) of the sample space. Hence, the probability that X takes the value xi is always non-zero, i.e. P(X = xi) ≠ 0

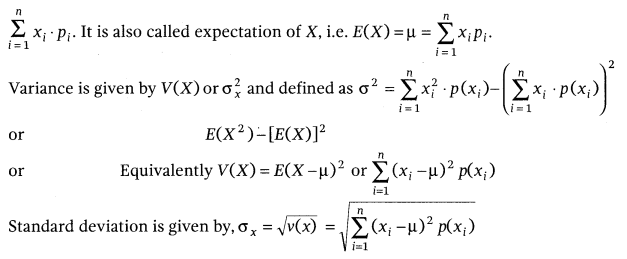

Mean and Variance of a Probability Distribution:

Mean of a probability distribution is

μ = Σ xi pi

and its variance is

σ² = Σ xi² pi - μ²
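
A short numerical sketch of the mean and variance of a discrete distribution, taking X to be the number of heads in two tosses of a fair coin. The distribution and variable names are an illustrative assumption, not part of the notes.

```python
from fractions import Fraction

# Probability distribution of X = number of heads in two fair tosses.
values = [0, 1, 2]
probs = [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]

assert sum(probs) == 1                    # probabilities must add up to 1

# Mean: sum of xi . pi
mean = sum(x * p for x, p in zip(values, probs))                    # 1

# Variance: sum of xi^2 . pi, minus the square of the mean
variance = sum(x**2 * p for x, p in zip(values, probs)) - mean**2   # 1/2
```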

Bernoulli Trial:

Trials of a random experiment are called Bernoulli trials if they satisfy the following conditions:

(i) There should be a finite number of trials.

(ii) The trials should be independent.

(iii) Each trial has exactly two outcomes, success or failure.

(iv) The probability of success remains the same in each trial.

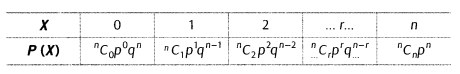

Binomial Distribution:

The probability distribution of the number of successes in an experiment consisting of n Bernoulli trials, obtained from the binomial expansion of (p + q)^n, is called the binomial distribution.

Let X be the number of successes in the n trials, so that X can take the values 0, 1, 2, ..., n. Then, by the binomial distribution, we have P(X = r) = nCr p^r q^(n - r)

where,

n = Total number of trials in an experiment

p = Probability of success in one trial

q = Probability of failure in one trial

r = Number of successes in the experiment

Also, p + q = 1

Binomial distribution of the number of successes X can be represented as

X    : 0          1                  ...  r                    ...  n
P(X) : nC0 q^n    nC1 p q^(n - 1)    ...  nCr p^r q^(n - r)    ...  nCn p^n

Mean and Variance of Binomial Distribution

(i) Mean (μ) = Σ xi pi = np

(ii) Variance (σ²) = Σ xi² pi - μ² = npq

(iii) Standard deviation (σ) = √Variance = √(npq)

Note: Mean > Variance, since npq < np whenever 0 < q < 1.
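
The binomial formulas can be checked numerically. The sketch below assumes n = 5 trials with success probability p = 1/3; these values and the variable names are hypothetical, chosen only for illustration.

```python
import math
from fractions import Fraction

n = 5
p = Fraction(1, 3)    # probability of success in one trial
q = 1 - p             # probability of failure in one trial

# P(X = r) = nCr . p^r . q^(n - r) for r = 0, 1, ..., n
pmf = [math.comb(n, r) * p**r * q**(n - r) for r in range(n + 1)]

# Mean and variance computed from the distribution itself.
mean = sum(r * pr for r, pr in enumerate(pmf))
variance = sum(r**2 * pr for r, pr in enumerate(pmf)) - mean**2
std_dev = math.sqrt(variance)

print(mean, variance)  # 5/3 10/9
```

The values computed from the table agree with np = 5/3 and npq = 10/9, and the mean exceeds the variance as the note states.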