Applications

Of Matrices And Determinants

In this chapter, we present some applications of

matrices in solving system of linear equations. To be specific, we study four

methods, namely

i)Matrix

inversion method,

(ii) Cramer’s rule

(iii)

Gaussian elimination method, and

(iv) Rank method.

Before

knowing these methods, we introduce the following:

(i)

Inverse of a non-singular square Inverse of a Matrix

The

multiplicative inverse of a square matrix is called its inverse matrix. If a

matrix AA has an inverse, then AA is said to be nonsingular or

invertible. A singular matrix does not have an inverse.

To find the inverse of a square matrix AA , you need to find a

matrix A−1A−1 such that the product

of AA and A−1A−1 is the identity matrix.

In other words, for every square

matrix AA which is nonsingular there exist an inverse matrix, with

the property that, AA−1=A−1A=IAA−1=A−1A=I , where II is the identity matrix of the

appropriate size.

Method 1:

Let AA be an n×n matrix.

Write the doubly augmented

matrix [A | In] .

Apply elementary row operations to write

the matrix in reduced row-echelon form.

Decide whether the matrix A is

invertible (nonsingular).

If A can be reduced to the

identity matrix In ,

then A−1 is the matrix on the right of the transformed augmented

matrix.

If A cannot be reduced to the

identity matrix, then A is singular.

Method 2:

You may use the following formula when

finding the inverse of n×n matrix.

If A is non-singular matrix,

there exists an inverse which is given by ,

where | A || A | is the determinant of the

matrix.

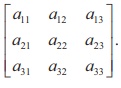

The Classical Adjoin of a Square Matrix :

Let A = [ a ij ]

be a square matrix. The transpose of the matrix whose ( i, j) entry is

the a ij cofactor is called the

classical adjoint of A:

![]()

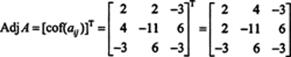

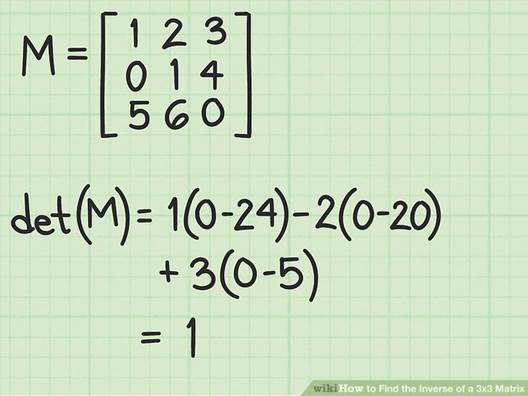

Example 1:

Find the adjoint of

the matrix

Solution:

The first step is to evaluate the cofactor of

every entry:

Therefore,

![]()

Why form the adjoint

matrix?

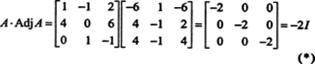

First, verify the following calculation where

the matrix A above is multiplied by its adjoint:

----------------------------------(*)

----------------------------------(*)

Now, since a Laplace expansion by the first column of A gives

equation

(*) becomes

![]()

This result gives the following equation for

the inverse of A:

![]()

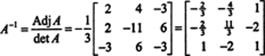

Example 2:

Determine the inverse of the following matrix

by first computing its adjoin:

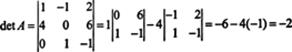

Solution:

First, evaluate the cofactor of each entry

in A:

These computations imply that

Now, since Laplace expansion along the first

row gives

the

inverse of A is

which

may be verified by checking that AA −1 = A −1 A = I.

Example 3:

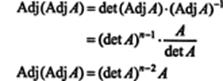

If A is an invertible n by n matrix, compute the

determinant of Adj A in terms of det A.

Solution:

Because A is invertible, the

equation A −1 =

Adj A/det A implies

![]()

Recall that if B is n x n and k is a scalar,

then det( kB) = k n det B. Applying this formula with k = det A and B = A −1 gives

![]()

Thus,

![]()

Example 4:

Show that the adjoin

of the adjoin of A is

guaranteed to equal A if A is an invertible 2 by 2

matrix, but not if A is

an invertible square matrix of higher order.

Solution:

First, the equation A · Adj A = (det A) I can be

rewritten

![]()

which

implies

![]()

Next, the equation A · Adj A = (det A) I also implies

![]()

This expression, along with the result of

Example 3, transforms (*) into

where n is the size

of the square matrix A. If n = 2, then (det A) n−2 =

(det A) 0 = 1—since det A ≠ 0—which implies Adj

(Adj A) = A, as desired. However, if n > 2, then

(det A) n−2 will not equal 1 for

every nonzero value of det A, so Adj (Adj A) will not

necessarily equal A.

Yet this proof does show that whatever the size of the matrix, Adj (Adj A) will equal A if

det A = 1.

The relation between adjoint

and inverse of a matrix :

To find the inverse of a matrix A, i.e A-1 we shall first define the adjoint of a matrix. Let

A be an n x n matrix. The (i,j)

cofactor of A is defined to be

Aij =

(-1)ij det(Mij),

where

Mij is the (i,j)th minor matrix obtained from A after

removing the ith row and jth

column. Let’s consider the n x n matrix A = (Aij) and

define the n x n matrix Adj(A) = AT. The matrix Adj(A) is called the adjoint of matrix A. When A is invertible, then its

inverse can be obtained by the formula given below.

The inverse is defined only for non-singular

square matrices. The following relationship holds between a matrix and its

inverse:

AA-1 = A-1A = I,

where I is the identity matrix

Theorems On Ad joint And Inverse Of A Matrix

Theorem 1

If A be any given square matrix of order n,

then A adj(A)

= adj(A) A = |A|I, where I is the identitiy

matrix of order n.

Proof:

Let

Since the sum of the product of elements of a

row (or a column) with corresponding cofactors is equal to |A| and otherwise

zero, we have

Similarly, we can show that adj(A) A

= |A| I

Hence, A adj(A) = adj(A)

A

Theorem 2

If A and B are

non-singular matrices of the same order, then AB and BA are also non-singular

matrices of the same order.

Theorem 3

The determinant of the product matrices is

equal to the product of their respective determinants, that is, |AB| = |A||B|,

where A and B are square matrices of the same order.

Remark:

We know that

Writing determinants of matrices on both

sides, we have

i.e,

i.e,

|adj(A)|

|A| = |A|3

or |adj(A)| = |A|2

In general, if A is a quare

matrix of order n, then |adj(A)| = |A|n-1.

Theorem 4

A square matrix A is invertible if and only

if A is a non-singular matrix.

Proof:

Let A be an

invertible matrix of order n and I be the identity matrix of the same order.

Then there exists a square matrix B of order n such that AB = BA = I. Now, AB =

I.

So |A| |B| = |I| = 1 (since |I| = 1 and |AB|

= |A| |B|). This gives |A| to be a non-zero value. Hence A is a non-singular

matrix. Conversely, let A be a non-singular matrix, then |A| is non-zero. Now A

adj(A)

= adj(A) A = |A| I (Theorem 1). Or,

or AB = BA

= I, where

Thus A is invertible and

Creating the Adjugate Matrix to Find

the Inverse Matrix:

![]()

Properties

of Inverse Matrices:

If A is

nonsingular, then so is A -1 and (A -1) -1 = A

If A and

B are nonsingular matrices, then AB is nonsingular and (AB) -1 = B-1 A -1

If A is

nonsingular then (AT)-1 = (A-1)T

If A and B are

matrices with AB=In then A and B are

inverses of each other.

Notice

that the fourth property implies that if AB = I then BA = I

Let A, A1 and A2 be n×n matrices, the following statements are true:

1. If A-1 = B, then A (col

k of B) = ek

2. If A has an inverse matrix, then there is only one inverse

matrix.

3. If A1 and A2 have

inverses, then A1 A2 has an inverse

and (A1 A2)-1 = A1-1 A2-1

4. If A has an inverse, then x = A-1d is

the solution of Ax = d and this is the only solution.

5. The following are equivalent:

(i) A has an inverse.

(ii) det (A) is

not zero.

(iii) Ax = 0 implies x = 0.

If c is any non-zero scalar then cA is

invertible and (cA)-1 = A-1/c.

For n = 0, 1, 2…, An is invertible and (An)-1 =

A-n = (A-1)n.

If A is a square matrix and n > 0 then:

A-n = (A-1)n

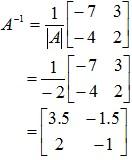

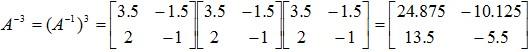

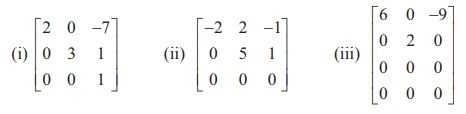

Example 1:

Compute A-3 for the matrix:

Solution:

First of all, we

need to find the inverse of the given matrix. The method to find the inverse is

only applicable for 2 × 2 matrices.

Steps are as follows:

[1] Interchange leading diagonal elements:

-7 → 2

2 → -7

[2] Change signs of the other 2 elements:

-3 → 3

4 → -4

[3] Find the determinant |A|

[4] Multiply result of [2] by 1/|A|

Now:

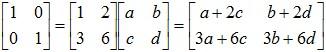

Example 2:

Given the matrix A verify

that the indicated matrix is in fact the inverse.

Solution:

To verify that we do in fact have the inverse we’ll need to check the condition

AA-1 = A-1A = I

Now we check whether AA-1 = A-1A = I:

Hence the required is verified.

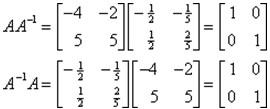

Example 3:

Let A be the 2 × 2

matrix,

Show that A has no inverse.

Solution:

An inverse for A must be a 2

× 2 matrix.

Let  such

that AB = BA = I. If such a matrix B exists, it

must satisfy the following equation:

such

that AB = BA = I. If such a matrix B exists, it

must satisfy the following equation:

The preceding equation requires that:

a + 2c = 1 and 3a + 6c = 0

which is clearly impossible, so we can conclude that A has no inverse.

Left cancellation property

& the right cancellation property :

In mathematics, the notion of cancellative is

a generalization of the notion of invertible.

![]() An element a in a magma (M, ∗) has

the left cancellation property (or

is left-cancellative) if for all b and c in M, a ∗ b = a ∗ c always

implies that b = c.

An element a in a magma (M, ∗) has

the left cancellation property (or

is left-cancellative) if for all b and c in M, a ∗ b = a ∗ c always

implies that b = c.

![]() An element a in

a magma (M, ∗) has

the right cancellation property (or

is right-cancellative) if for all b and c in M, b ∗ a = c ∗ a always implies

that b = c.

An element a in

a magma (M, ∗) has

the right cancellation property (or

is right-cancellative) if for all b and c in M, b ∗ a = c ∗ a always implies

that b = c.

![]() An element a in

a magma (M, ∗) has

the two-sided cancellation property (or

is cancellative) if it is both left- and right-cancellative.

An element a in

a magma (M, ∗) has

the two-sided cancellation property (or

is cancellative) if it is both left- and right-cancellative.

![]() A magma (M,

∗) has

the left cancellation property (or is left-cancellative) if all a in

the magma are left cancellative, and similar definitions apply for the right

cancellative or two-sided cancellative properties.

A magma (M,

∗) has

the left cancellation property (or is left-cancellative) if all a in

the magma are left cancellative, and similar definitions apply for the right

cancellative or two-sided cancellative properties.

![]() A left-invertible element is

left-cancellative, and analogously for right and two-sided.

A left-invertible element is

left-cancellative, and analogously for right and two-sided.

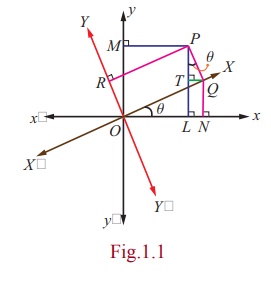

Application of matrices to

geometry :

There

is a special type of non-singular matrices which are widely used in

applications of matrices to geometry. For simplicity, we consider

two-dimensional analytical geometry.

Let O be the

origin, and x 'O x and y 'Oy be

the x -axis and y -axis. Let P be

a point in the plane whose coordinates are (x, y) with

respect to the coordinate system. Suppose that we rotate the x -axis and

y -axis about the origin, through an angle θ as shown in the figure. Let X 'OX and Y 'OY be

the new X -axis and new Y -axis. Let ( X ,Y ) be the

new set of coordinates of P with respect to the new

coordinate system. Referring to Fig.1.1,

we get

x

= OL = ON − LN = X cos θ – QT = X cos

θ − Y sin θ ,

y

= PL = PT + TL = QN + PT = X sin θ + Y cos

θ .

These equations provide transformation

of one coordinate system into another coordinate system.

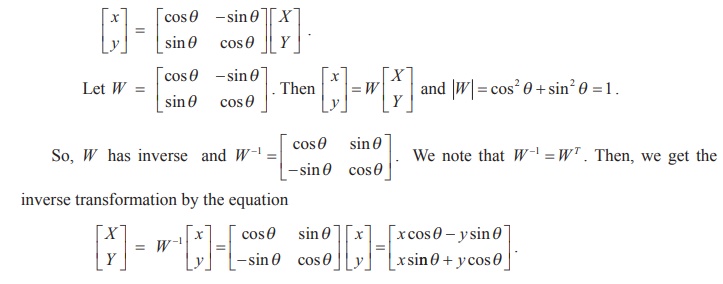

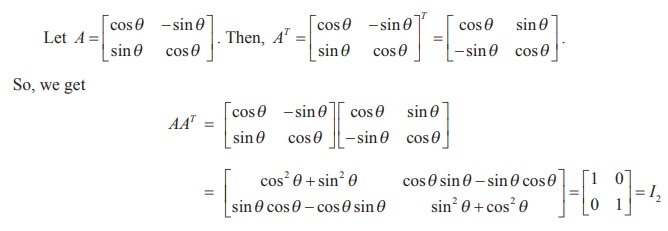

The above two equations can be written

in the matrix form

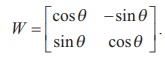

Hence, we get the transformation X = x cosθ - y sinθ , Y = x sinθ + y cosθ .

This transformation is used in Computer Graphics and determined by the

matrix

We note that the matrix W satisfies

a special property W -1 = WT ; that is, WW T = WTW =I

Definition 1.3

A

square matrix A is called orthogonal if AAT = ATA = I.

Note

A is orthogonal if and only if A is

non-singular and A−1 = AT .

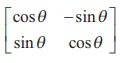

Example

1.11

Prove

that  is orthogonal.

is orthogonal.

Solution

Similarly,

we get ATA = I2 . Hence AAT =

ATA = I2 ⇒ A is orthogonal.

Application of matrices to Cryptography :

One of the important applications of inverse of a

non-singular square matrix is in cryptography. Cryptography is an art of

communication between two people by keeping the information not known to

others. It is based upon two factors, namely encryption and decryption. Encryption means the process of

transformation of an information (plain form) into an unreadable form (coded

form). On the other hand, Decryption means the transformation of the coded message back into original form.

Encryption and decryption require a secret technique which is known only to the

sender and the receiver.

This secret is called a key. One way of generating a key is by using a non-singular matrix

to encrypt a message by the sender. The receiver decodes (decrypts) the message

to retrieve the original message by using the inverse of the matrix. The matrix

used for encryption is called encryption matrix (encoding matrix) and that used for decoding is called decryption matrix (decoding matrix).

We

explain the process of encryption and decryption by means of an example.

Suppose

that the sender and receiver consider messages in alphabets A − Z only, both assign the numbers 1-26 to the

letters A − Z respectively, and the number 0 to a blank

space. For simplicity, the sender employs a key as post-multiplication by a

non-singular matrix of order 3 of his own choice. The receiver uses post-multiplication

by the inverse of the matrix which has been chosen by the sender.

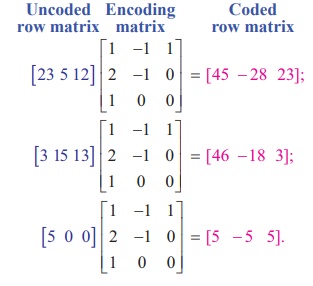

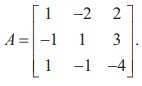

Let

the encoding matrix be

Let

the message to be sent by the sender be “WELCOME”.

Since

the key is taken as the operation of post-multiplication by a square matrix of

order 3, the message is cut into pieces (WEL), (COM), (E), each of length 3, and

converted into a sequence of row matrices of numbers:

[23 5 12],[3 15 13],[5 0 0].

Note

that, we have included two zeros in the last row matrix. The reason is to get a

row matrix with 5 as the first entry.

Next,

we encode the message by post-multiplying each row matrix as given below:

So

the encoded message is [45 − 28 −23] [46 -18 3] [

5 −5 5]

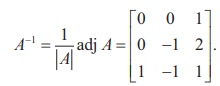

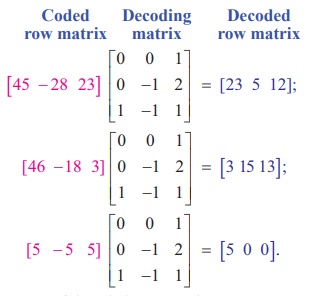

The

receiver will decode the message by the reverse key, post-multiplying by the

inverse of A.

So

the decoding matrix is

The

receiver decodes the coded message as follows:

So,

the sequence of decoded row matrices is [23

5 12] , [3 15 13], [5 0 0].

Thus,

the receiver reads the message as “WELCOME”

Elementary Transformations of a

Matrix

A

matrix can be transformed to another matrix by certain operations called

elementary row operations and elementary column

operations.

Elementary row and column operations

Elementary

row (column) operations on a matrix are as follows:

![]() The interchanging of any two

rows (columns) of the matrix

The interchanging of any two

rows (columns) of the matrix

![]() Replacing a row (column) of

the matrix by a non-zero scalar multiple of the row (column) by a non-zero

scalar.

Replacing a row (column) of

the matrix by a non-zero scalar multiple of the row (column) by a non-zero

scalar.

![]() Replacing a row (column) of

the matrix by a sum of the row (column) with a non-zero scalar multiple of

another row (column) of the matrix.

Replacing a row (column) of

the matrix by a sum of the row (column) with a non-zero scalar multiple of

another row (column) of the matrix.

Elementary

row operations and elementary column operations on a matrix are known as

elementary transformations.

We

use the following notations for elementary row transformations:

i. Interchanging of ith and jth rows

is denoted by Ri ↔ Rj .

ii. The multiplication of each

element of ith row by a

non-zero constant λ is denoted by Ri → λ Ri .

iii. Addition to ith row, a non-zero constant λ multiple

of jth row is denoted

by Ri → Ri + λ Rj .

Similar notations are used for elementary column transformations.

Definition 1.4

Two

matrices A and B of same order are said to be

equivalent to one another if one can be obtained from the other by the

applications of elementary transformations. Symbolically, we write A ~ B to

mean that the matrix A is equivalent to the matrix B .

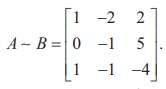

For

instance, let us consider a matrix

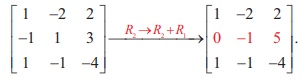

After

performing the elementary row operation R2 → R2 + R1 on A , we get a matrix B in which

the second row is the sum of the second row in A and the first

row in A .

Thus,

we get

The

above elementary row transformation is also represented as follows:

Note

An

elementary transformation transforms a given matrix into another matrix which

need not be equal

to the given matrix.

Row-Echelon form

Using the row elementary operations, we can transform a given

non-zero matrix to a simplified form called a Row-echelon

form. In a row-echelon form, we may

have rows all of whose entries are zero. Such rows are called zero rows. A non-zero row is one in

which at least one of the entries is not zero. For instance, in the matrix,

,

,

R1 and R2 are non-zero rows

and R3 is a zero row

Definition 1.5

A

non-zero matrix E is said to be in a row-echelon form if:

i. All zero rows of E occur below every non-zero

row of E.

ii. The first non-zero element in any row i of E occurs

in the jth column

of E , then all other entries in

the jth column of E below

the first non-zero element of row i are

zeros.

iii. The first non-zero entry in the ith

row of E lies to the left of the first non-zero entry in (i +1)th row of E .

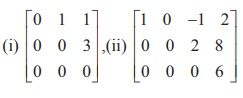

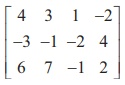

The

following matrices are in row-echelon form:

Consider

the matrix in (i). Go up row by row from the last row

of the matrix. The third row is a zero row. The first non-zero entry in the

second row occurs in the third column and it lies to the right of the first

non-zero entry in the first row which occurs in the second column. So the

matrix is in row- echelon form.

Consider

the matrix in (ii). Go up row by row from the last row of the matrix. All the

rows are non-zero rows. The first non-zero entry in the third row occurs in the

fourth column and it occurs to the right of the first non-zero entry in the

second row which occurs in the third column. The first non-zero entry in the

second row occurs in the third column and it occurs to the right of the first

non-zero entry in the first row which occurs in the first column. So the matrix

is in row-echelon form.

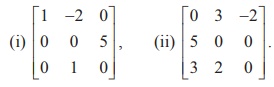

The

following matrices are not in row-echelon form:

Consider

the matrix in (i). In this matrix, the first non-zero

entry in the third row occurs in the second column and it is on the left of the

first non-zero entry in the second row which occurs in the third column. So the

matrix is not in row-echelon form.

Consider

the matrix in (ii). In this matrix, the first non-zero entry in the second row

occurs in the first column and it is on the left of the first non-zero entry in

the first row which occurs in the second column. So the matrix is not in

row-echelon form.

Method to reduce a matrix [aij]m ×n to a row-echelon form.

Step 1

Inspect

the first row. If the first row is a zero row, then the row is interchanged

with a non-zero row below the first row. If a11 is

not equal to 0, then go to step 2. Otherwise, interchange the first row R1 with

any other row below the first row which has a non-zero element in the first

column; if no row below the first row has non-zero entry in the first column,

then consider a12 .

If a12 is not equal to 0, then go to step 2.

Otherwise, interchange the first row R1 with any

other row below the first row which has a non-zero element in the second

column; if no row below the first row has non-zero entry in the second column,

then consider a13. Proceed in the same way till we get a

non-zero entry in the first row. This is called pivoting and the first non-zero

element in the first row is called the pivot of the first row.

Step 2

Use

the first row and elementary row operations to transform all elements under the

pivot to become zeros.

Step 3

Consider

the next row as first row and perform steps 1 and 2 with the rows below this

row only.

Repeat

the step until all rows are exhausted.

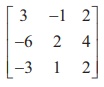

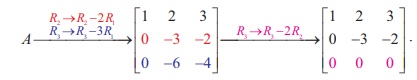

Example 1.13

Reduce

the matrix  to a row-echelon form.

to a row-echelon form.

Solution

Note

This

is also a row-echelon form of the given matrix.

So,

a row-echelon form of a matrix is not necessarily unique.

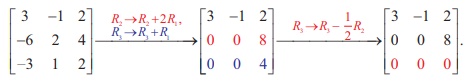

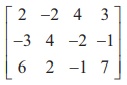

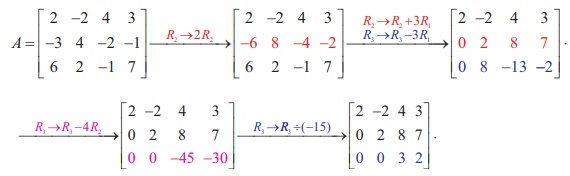

Example 1.14

Reduce

the matrix  to a row-echelon form.

to a row-echelon form.

Solution

Rank of a Matrix

To define the rank of a matrix, we have to know about sub-matrices

and minors of a matrix.

Let A be a given matrix. A matrix obtained by

deleting some rows and some columns of A is called a sub-matrix of A. A matrix

is a sub-matrix of itself because it is obtained by leaving zero number of rows

and zero number of columns.

Recall

that the determinant of a square sub-matrix of a matrix is called a minor of

the matrix.

Definition

1.6

The rank of a matrix A is defined as the order of a highest order non-vanishing minor of

the matrix A. It is denoted by the symbol ρ (A). The rank of a zero matrix is defined to be 0.

Note

![]() If a matrix contains

at-least one non-zero element, then ρ ( A) ≥ 1.

If a matrix contains

at-least one non-zero element, then ρ ( A) ≥ 1.

![]() The rank of the identity

matrix In is n.

The rank of the identity

matrix In is n.

![]() If the rank of a

matrix A is r, then there exists at-least one

minor of A of order r which does not vanish

and every minor of A of order r +1

and higher order (if any) vanishes.

If the rank of a

matrix A is r, then there exists at-least one

minor of A of order r which does not vanish

and every minor of A of order r +1

and higher order (if any) vanishes.

![]() If A is

an m × n matrix, then ρ (A) ≤ min{m, n} =

minimum of m, n.

If A is

an m × n matrix, then ρ (A) ≤ min{m, n} =

minimum of m, n.

![]() A square matrix A of

order n has inverse if and only if ρ ( A) = n.

A square matrix A of

order n has inverse if and only if ρ ( A) = n.

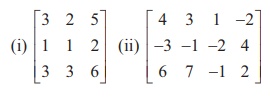

Example 1.15

Find

the rank of each of the following matrices:

Solution

(i) Let A = . Then A is a matrix of order 3× 3. So ρ(A)

≤ min {3, 3} = 3. The highest order

of minors of A is 3 . There is only one third order minor of A

.

. Then A is a matrix of order 3× 3. So ρ(A)

≤ min {3, 3} = 3. The highest order

of minors of A is 3 . There is only one third order minor of A

.

It

is  = 3 (6− 6) − 2

(6−6) + 5 (3 − 3) = 0. So, ρ(A)

< 3.

= 3 (6− 6) − 2

(6−6) + 5 (3 − 3) = 0. So, ρ(A)

< 3.

Next

consider the second-order minors of A .

We

find that the second order minor  = 3 − 2 = 1 ≠ 0 . So ρ(A) = 2 .

= 3 − 2 = 1 ≠ 0 . So ρ(A) = 2 .

(ii)

Let A =  . Then A is a matrix of order 3×4 . So ρ(A) ≤ min {3, 4} = 3.

. Then A is a matrix of order 3×4 . So ρ(A) ≤ min {3, 4} = 3.

The

highest order of minors of A is 3 . We search for a

non-zero third-order minor of A . But

we find that all of them

vanish. In fact, we have

So,

ρ(A) < 3. Next, we search for a

non-zero second-order minor of A .

We

find that  = -4+9 =5 ≠ 0 . So, ρ(A) = 2 .

= -4+9 =5 ≠ 0 . So, ρ(A) = 2 .

Remark

Finding

the rank of a matrix by searching a highest order non-vanishing minor is quite

tedious when the order of the matrix is quite large. There is another easy

method for finding the rank of a matrix even if the order of the matrix is

quite high. This method is by computing the rank of an equivalent row-echelon

form of the matrix. If a matrix is in row-echelon form, then all entries below

the leading diagonal (it is the line joining the positions of the diagonal

elements a11 , a22 , a33 ,.

of the matrix) are zeros. So, checking whether a minor is zero or not, is quite

simple.

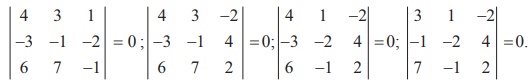

Example 1.16

Find

the rank of the following matrices which are in row-echelon form

:

Solution

(i) Let A =  . Then A is a matrix of order 3 3 × and ρ(A)

≤ 3

. Then A is a matrix of order 3 3 × and ρ(A)

≤ 3

The

third order minor |A| =  = (2) (3)(

1) = 6 ≠ 0 . So, ρ(A) = 3 .

= (2) (3)(

1) = 6 ≠ 0 . So, ρ(A) = 3 .

Note

that there are three non-zero rows.

(ii)

Let A =  . Then A is a matrix of order 3× 3 and ρ(A)

≤ 3.

. Then A is a matrix of order 3× 3 and ρ(A)

≤ 3.

The

only third order minor is |A| =  = (-2) (5) (0) = 0 . So ρ(A) ≤ 2 .

= (-2) (5) (0) = 0 . So ρ(A) ≤ 2 .

There

are several second order minors. We find that there is a second order minor,

for example,  = (-2)(5) = -10 ≠ 0 . So, ρ(A)

= 2.

= (-2)(5) = -10 ≠ 0 . So, ρ(A)

= 2.

Note

that there are two non-zero rows. The third row is a zero row.

(iii)

Let A =  . Then A is a matrix of order 4 × 3 and ρ(A)

≤ 3.

. Then A is a matrix of order 4 × 3 and ρ(A)

≤ 3.

The

last two rows are zero rows. There are several second order minors. We find

that there is a second order minor, for

example,  = (6) (2) = 12 ≠ 0 .

= (6) (2) = 12 ≠ 0 .

So, ρ(A) = 2.

Note

that

there are two non-zero rows. The third and fourth rows are zero rows.

We

observe from the above example that the rank of a matrix in row echelon form is

equal to the number of non-zero rows in it. We

state this observation as a theorem without proof.

Theorem 1.11

The

rank of a matrix in row echelon form is the number of non-zero rows in it.

The rank of a matrix which is not in a row-echelon form, can be

found by applying the following result which is stated without proof.

Theorem 1.12

The

rank of a non-zero matrix is equal to the number of non-zero rows in a

row-echelon form of the matrix.

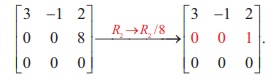

Example 1.17

Find

the rank of the matrix  by reducing it to a row-echelon form.

by reducing it to a row-echelon form.

Solution

Let

A =  . Applying elementary row operations, we get

. Applying elementary row operations, we get

The

last equivalent matrix is in row-echelon form. It has two non-zero rows. So,

ρ (A)= 2.

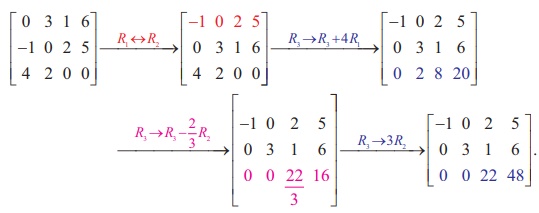

Example 1.18

Find

the rank of the matrix  by reducing it to a row-echelon form.

by reducing it to a row-echelon form.

Solution

Let

A be the matrix. Performing elementary row operations, we get

The

last equivalent matrix is in row-echelon form. It has three non-zero rows. So, ρ(A) = 3 .

Elementary

row operations on a matrix can be performed by pre-multiplying the given matrix

by a special class of matrices called elementary matrices.

Definition 1.7

An elementary matrix is

defined as a matrix which is obtained from an identity matrix by applying only

one elementary transformation.

Remark

If

we are dealing with matrices with three rows, then all elementary matrices are

square matrices of order 3 which are obtained by carrying out only one

elementary row operations on the unit matrix I3. Every

elementary row operation that is carried out on a given matrix A can

be obtained by pre-multiplying A with elementary matrix.

Similarly, every elementary column operation that is carried out on a given

matrix A can be obtained by post-multiplying Awith an elementary matrix. In the present chapter,

we use elementary row operations only.

For

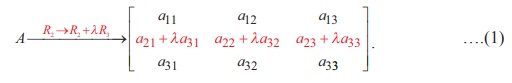

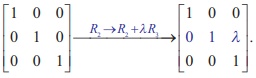

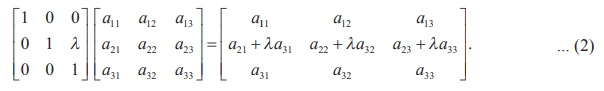

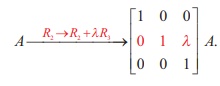

instance, let us consider the matrix A =

Suppose

that we do the transformation R2 →

R2 + λR3 on A, where λ ≠ 0 is a

constant. Then, we get

The

matrix  is an elementary matrix, since we have

is an elementary matrix, since we have

Pre-multiplying

A by  , we get

, we get

From

(1) and (2), we get

So,

the effect of applying the elementary transformation R2 →

R2 + λR3 on A is the same as that of

pre-multiplying the matrix A with the elementary matrix

Similarly,

we can show that

(i) the effect of applying the

elementary transformation R2↔ R3 on A is the

same as that of pre-multiplying the matrix A with the elementary

matrix

(ii)

the effect of applying the elementary transformation R2 →

R2λ on A is the same as that of pre-multiplying the

matrix A with the elementary matrix

We

state the following result without proof.

Theorem 1.13

Every

non-singular matrix can be transformed to an identity matrix, by a sequence of

elementary row operations.

As

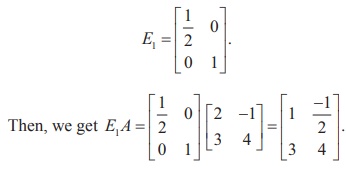

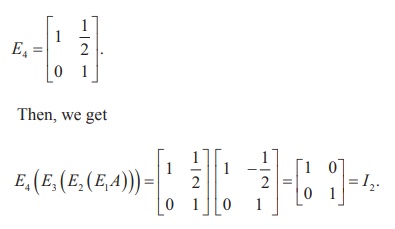

an illustration of the above theorem, let us consider the matrix A =

Then,

|A| = 12+ 3 = 15 ≠ 0. So, A is

non-singular. Let us transform A into I2 by a sequence of elementary row operations. First, we search for a row

operation to make a11 of A as 1. The elementary row operation

needed for this is R1 → (1/2) R1. The

corresponding elementary matrix is

Next,

let us make all elements below a11 of E1A as 0.

There is only one element a21.

The

elementary row operation needed for this is R2

→ R2 + (−3) R1 .

The

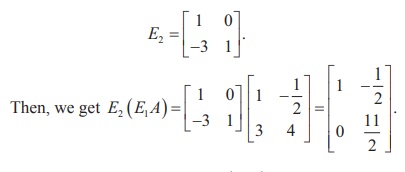

corresponding elementary matrix is E2 =

Next,

let us make a22 of E2(E2A)

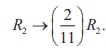

as 1. The elementary row operation needed for this is

The

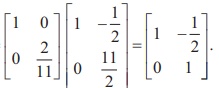

corresponding elementary matrix is E3 =

Then,

we get E3(E2(E1A))

=

Finally,

let us find an elementary row operation to make a12 of E3(E2(E1A)) as 0. The

elementary row operation needed for this is R1 →

R1 + (1/2) R2. The corresponding elementary matrix

is

We

write the above sequence of elementary transformations in the following manner:

Example 1.19

Show

that the matrix  is non-singular and reduce it to the identity matrix by

elementary row transformations.

is non-singular and reduce it to the identity matrix by

elementary row transformations.

Solution

Let

A =  .Then, |A| = 3 (0+2 ) – 1(2+5) + 4(4-0) =

6-7+16 ≠ 0. So, A is non-singular. Keeping the identity matrix as our

goal, we perform the row operations sequentially on A as follows:

.Then, |A| = 3 (0+2 ) – 1(2+5) + 4(4-0) =

6-7+16 ≠ 0. So, A is non-singular. Keeping the identity matrix as our

goal, we perform the row operations sequentially on A as follows:

Gauss-Jordan Method

Let A be

a non-singular square matrix of order n .

Let B be the inverse of A.

Then,

we have AB = BA = In . By

the property of In , we

have A = In A = AIn .

Consider

the equation A = In A …………………………………………..(1)

Since A is

non-singular, pre-multiplying by a sequence of elementary matrices (row

operations) on both sides of (1), A on the left-hand-side of

(1) is transformed to the identity matrix In and

the same sequence of elementary matrices (row operations) transforms In of

the right-hand-side of (1) to a matrix B.

So, equation (1) transforms to In = BA.

Hence, the inverse of A is B. That is, A−1 = B.

Note

If E1 , E2 ,…, Ek are elementary matrices

(row operations) such that (Ek … E2 E1 ) A = In ,

then A−1 = Ek … E2 E1.

Transforming

a non-singular matrix A to the form In by

applying elementary row operations, is called Gauss-Jordan

method. The steps in finding A−1 by Gauss-Jordan method are given below:

Step 1

Augment

the identity matrix In on the right-side of A to

get the matrix [ A | In ] .

Step 2

Obtain

elementary matrices (row operations) E1 , E2 ,…, Ek such that (Ek … E2 E1 ) A = In .

Apply E1 , E2 ,…, Ek on [ A | In ] .

Then [(Ek …… E2 E1 ) A | (Ek ….. E2 E1 ) In].

That is, [In | A−1 ].

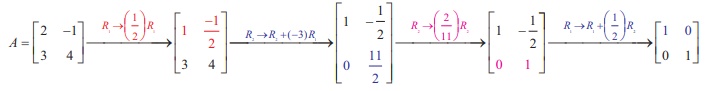

Example 1.20

Find

the inverse of the non-singular matrix A =  , by Gauss-Jordan method.

, by Gauss-Jordan method.

Solution

Applying

Gauss-Jordan method, we get

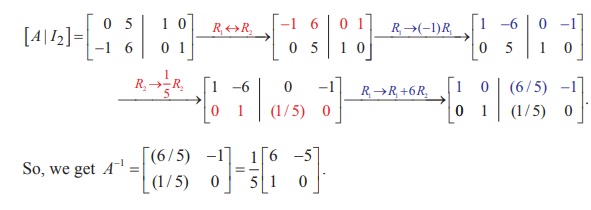

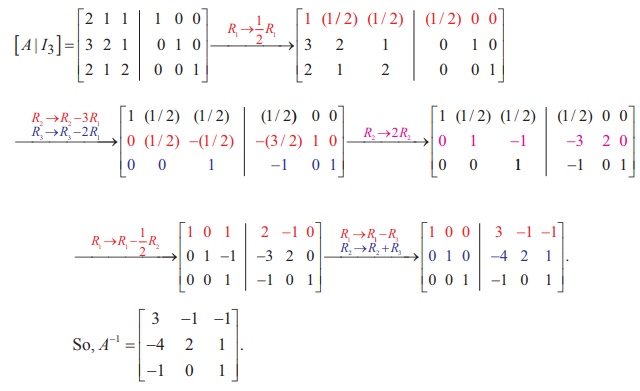

Example 1.21

Find

the inverse of A =  by Gauss-Jordan method.

by Gauss-Jordan method.

Solution

Applying

Gauss-Jordan method, we get

Applications of Matrices: Solving

System of Linear Equations

One

of the important applications of matrices and determinants is solving of system

of linear equations. Systems of linear equations arise as mathematical models

of several phenomena occurring in biology, chemistry, commerce, economics,

physics and engineering. For instance, analysis of circuit theory, analysis of

input-output models, and analysis of chemical reactions require solutions of

systems of linear equations.

System of Linear Equations in Matrix Form

A

system of m linear equations in n unknowns is

of the following form:

a11x1 + a12x2 + a13x3 +

……… + a1nxn + = b1

a21x1 + a22x2 + a23x3 +

……… + a2nxn + = b2

a31x1 + a32x2 + a3x3 +

……… + a3nxn + = b3

…..

…. ….. ….. …..

...

Am1x1 + am2x2 + am3x3 +

……… + amnxn + = bm

where the coefficients aij ,

i = 1, 2, …. , m; j = 1, 2,….., n and bk ,

k = 1, 2,….., m are constants. If all the bk 's

are zeros, then the above system is called a homogeneous

system of linear equations. On the other

hand, if at least one of the bk 's is non-zero, then the above system is called

a non-homogeneous system of linear equations. If there exist values α1 , α2 , ….. , αn for x1, x2 , …. , xn respectively which satisfy

every equation of (1), then the ordered n − tuple (α1 , α2 , …. , αn ) is

called a solution of (1). The above system (1) can be put in a matrix form as

follows:

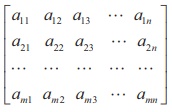

Let

A =  be the m x n matrix

formed by the coefficients of x1, x2 , x3,….

, xn . The first row of A

is formed by the coefficients of x1, x2 , x3,….

, xn in the same order in

which they occur in the first equation. Likewise, the other rows of A are formed. The first column is formed by the

coefficients of x1 in the m equations

in the same order. The other columns are formed in a similar way.

be the m x n matrix

formed by the coefficients of x1, x2 , x3,….

, xn . The first row of A

is formed by the coefficients of x1, x2 , x3,….

, xn in the same order in

which they occur in the first equation. Likewise, the other rows of A are formed. The first column is formed by the

coefficients of x1 in the m equations

in the same order. The other columns are formed in a similar way.

Let

X = ![]() be the n x1 order column matrix formed by

the unknowns x1, x2 , x3,….

, xn

be the n x1 order column matrix formed by

the unknowns x1, x2 , x3,….

, xn

Let

B = ![]() be the m x 1 order column matrix formed by the right-hand

side constants b1, b2 , b3 ,

…. , bm .

be the m x 1 order column matrix formed by the right-hand

side constants b1, b2 , b3 ,

…. , bm .

Then

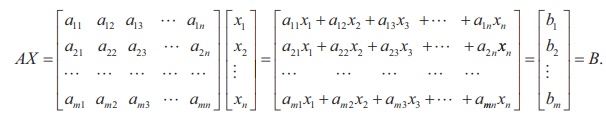

we get

Then

AX = B is a matrix equation involving matrices and it is called the matrix form of the system of

linear equations (1). The matrix A is called the coefficient matrix of the system and the

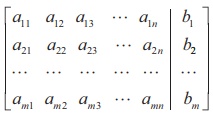

matrix

is called the augmented matrix of

the system. We denote the augmented matrix by [ A | B

].

As

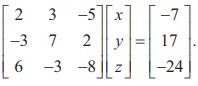

an example, the matrix form of the system of linear equations

2x

+ 3y - 5z + 7 = 0, 7 y + 2z - 3x = 17, 6x - 3y - 8z + 24 = 0 is

Solution to a System of Linear equations

The

meaning of solution to a system of linear equations can be understood by

considering the following cases :

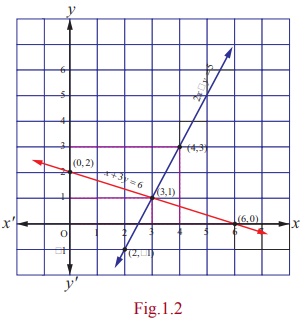

Case (i)

Consider

the system of linear equations

2x

- y = 5 , ...

(1)

x

+ 3y = 6 . ...

(2)

These

two equations represent a pair of straight lines in two dimensional analytical

geometry (see the Fig. 1.2). Using (1), we get

x = [5 + y] / 2

(3)

Substituting

(3) in (2) and simplifying, we get y = 1.

Substituting

y = 1 in (1) and simplifying, we get x = 3 .

Both

equations (1) and (2) are satisfied by x = 3 and y = 1.

That is, a solution of (1) is also a solution of (2).

So, we say that the system is consistent and has unique solution

(3,1) . The point (3,1) is

the point of intersection of the two lines 2x - y =

5 and x + 3y = 6 .

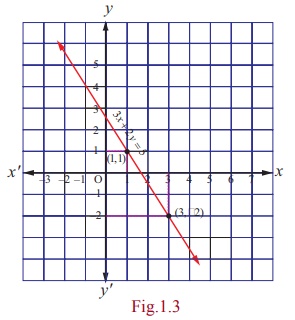

Case (ii)

Consider

the system of linear equations

3x

+ 2 y = 5 , ...

(1)

6x

+ 4 y = 10 ... (2)

Using

equation (1), we get

x

= [5 - 2 y] / 3 ... (3)

Substituting

(3) in (2) and simplifying, we get 0 = 0.

This

informs us that equation (2) is an elementary transformation of equation (1).

In fact, by dividing equation (2) by 2, we get equation (1). It is not possible

to find uniquely x and y with just a single equation (1).

So

we are forced to assume the value of one unknown, say y = t , where t is any real number.

Then,

x = [5 - 2t] /3. The two equations (1) and (2) represent one and only one

straight line (coincident lines) in two dimensional analytical geometry (see

Fig. 1.3) . In other words, the system is consistent

(a solution of (1) is also a solution of (2)) and has infinitely many

solutions, as t can assume any real value.

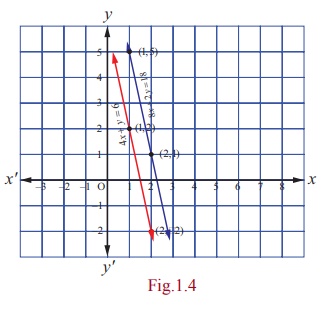

Case (iii)

Consider

the system of linear equations

4x

+ y = 6 , ... (1)

8x

+ 2 y = 18 . ...

(2)

Using

equation (1), we get

x

= [6 – y] / 4 ... (3)

Substituting

(3) in (2) and simplifying, we get 12 = 18 .

This

is a contradicting result, which informs us that equation (2) is inconsistent

with equation (1). So, a solution of (1) is not a solution of (2).

In

other words, the system is inconsistent and has no solution. We note that the

two equations represent two parallel straight lines (non-coincident) in two

dimensional analytical geometry (see Fig. 1.4). We know that two non-coincident

parallel lines never meet in real points.

Note

(1)

Interchanging any two equations of a system of linear equations does not alter

the solution of the system.

(2)

Replacing an equation of a system of linear equations by a non-zero constant

multiple of itself does not alter the solution of the system.

(3)

Replacing an equation of a system of linear equations by addition of itself

with a non-zero multiple of any other equation of the system does not alter the

solution of the system.

Matrix Inversion Method

This

method can be applied only when the coefficient matrix is a square matrix and

non-singular.

Consider

the matrix equation

AX = B , … (1)

where A is a

square matrix and non-singular. Since A is non-singular, A−1 exists and A−1 A = AA−1 = I.

Pre-multiplying both sides of (1) by A−1, we get A−1 ( AX ) = A−1B. That is, ( A−1 A) X = A−1B. Hence, we get X = A−1B.

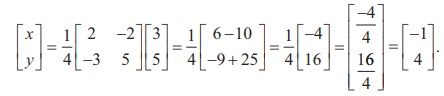

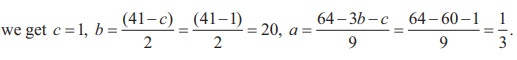

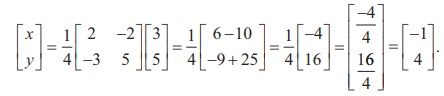

Example 1.22

Solve

the following system of linear equations, using matrix inversion method:

5x + 2 y = 3,

3x + 2 y = 5

.

Solution

The

matrix form of the system is AX = B , where

We

find |A| =  = 10 - 6= 4 ≠ 0. So, A−1 exists and A−1 =

= 10 - 6= 4 ≠ 0. So, A−1 exists and A−1 =

Then,

applying the formula X = A−1B , we get

So

the solution is (x = −1, y = 4).

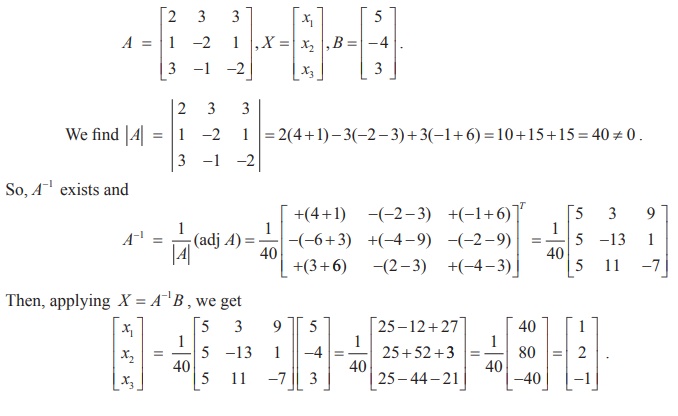

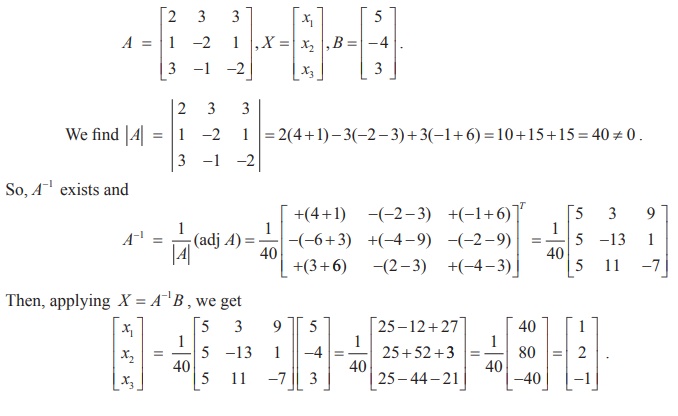

Example 1.23

Solve

the following system of equations, using matrix inversion method:

2x1 +

3x2 + 3x3 = 5,

x1 – 2x2 + x3 =

-4,

3x1 –

x2 – 2x3 = 3

Solution

The

matrix form of the system is AX = B,where

So,

the solution is ( x1 =

1, x2 = 2, x3 =

−1) .

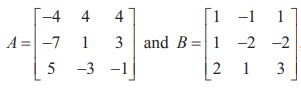

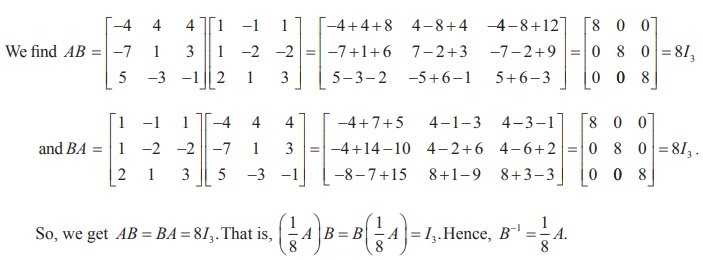

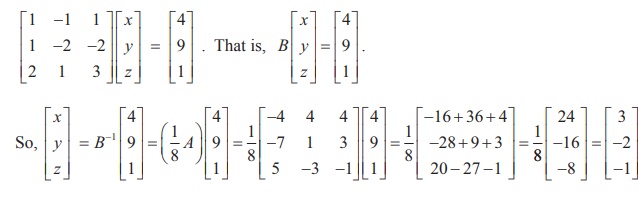

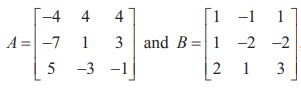

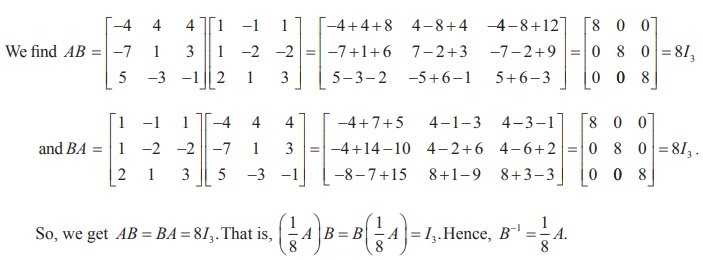

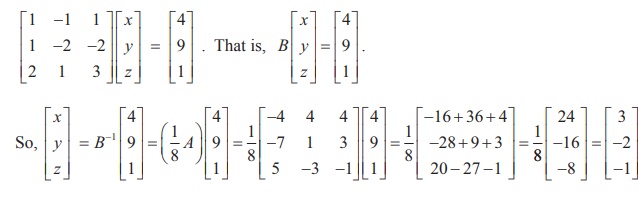

Example 1.24

If  , find the products AB and BA and hence solve the system of

equations x − y + z = 4, x – 2y – 2z = 9, 2x + y +3z =1.

, find the products AB and BA and hence solve the system of

equations x − y + z = 4, x – 2y – 2z = 9, 2x + y +3z =1.

Solution

Writing

the given system of equations in matrix form, we get

Hence,

the solution is (x = 3, y = - 2, z =

−1).

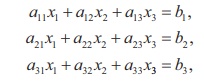

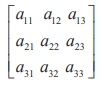

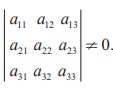

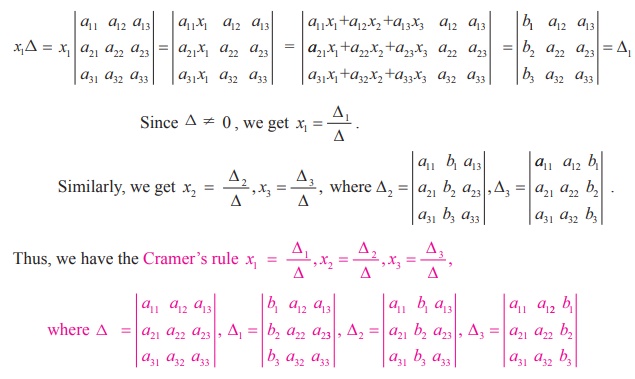

CRAMER’S RULE

This

rule can be applied only when the coefficient matrix is a square matrix and

non-singular. It is explained by

considering the following system of equations:

where the coefficient

matrix  is non-singular. Then

is non-singular. Then

Let

us put Δ =  . Then, we have

. Then, we have

Note

Replacing the first column

elements a11 , a21 , a31 of Δ with b1 , b2 , b3 respectively,

we get Δ1. Replacing the second column elements a12 , a22 , a32 of Δ with b1 , b2 , b3 respectively,

we get Δ2 . Replacing the third column elements a13 , a23 , a33 of Δ with b1 , b2 , b3 respectively,

we get Δ3.

If Δ = 0,

Cramer’s rule cannot be applied.

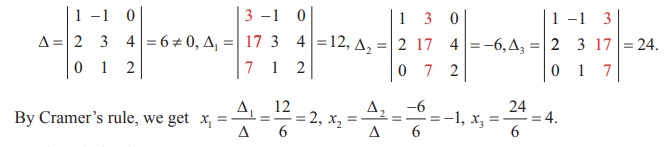

Example 1.25

Solve,

by Cramer’s rule, the system of equations

x1 − x2 = 3, 2x1 + 3x2 + 4x3 = 17, x2 + 2x3 = 7.

Solution

First

we evaluate the determinants

So,

the solution is (x1 =

2, x2 = - 1, x3 =

4).

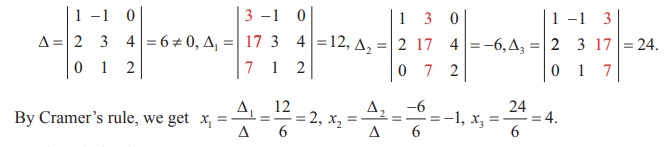

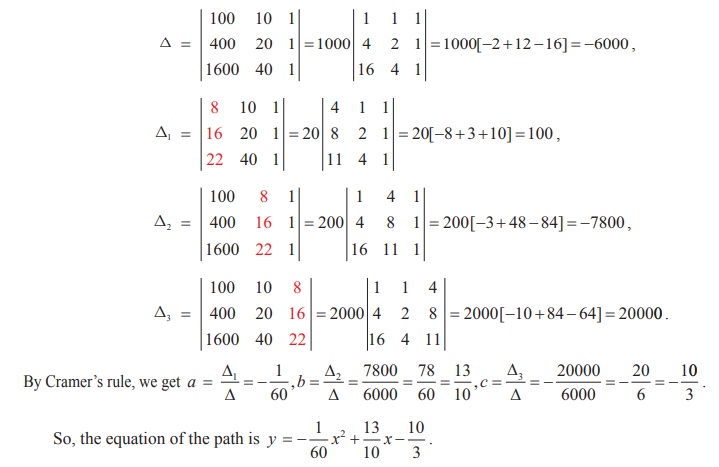

Example 1.26

In a T20 match, Chennai

Super Kings needed just 6 runs to win with 1 ball left to go in the last over.

The last ball was bowled and the batsman at the crease hit it high up. The ball

traversed along a path in a vertical plane and the equation of the path

is y = ax2 + bx + c with

respect to a xy -coordinate

system in the vertical plane and the ball

traversed through the points

(10,8), (20,16), (30,18) , can you conclude that Chennai Super Kings won

the match?

Justify

your answer. (All distances are measured in metres

and the meeting point of the plane of the path with the farthest boundary

line is (70, 0).)

Solution

The path y = ax2 + bx + c passes

through the points (10,8), (20,16), (40, 22) . So, we get the system of equations 100a + 10b + c = 8, 400a + 20b + c = 16,1600a + 40b + c = 22. To apply Cramer’s rule, we find

When x =

70, we get y = 6. So, the ball went by 6 metres

high over the boundary line and it is impossible for a fielder standing even just before the boundary line to jump and catch the ball. Hence the ball went for a super six

and the Chennai Super Kings won the match.

Gaussian Elimination Method

This

method can be applied even if the coefficient matrix is singular matrix and

rectangular matrix. It is essentially the method of substitution which we have

already seen. In this method, we transform the augmented matrix of the system

of linear equations into row-echelon form and then by back-substitution, we get

the solution.

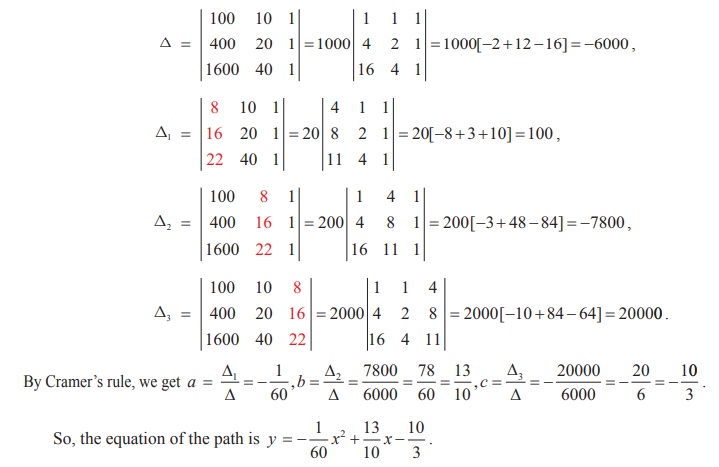

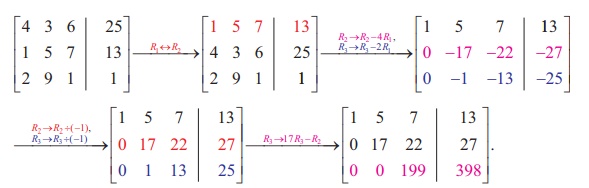

Example 1.27

Solve

the following system of linear equations, by Gaussian elimination method :

4x + 3y + 6z = 25, x + 5 y + 7z = 13,

2x + 9 y + z = 1.

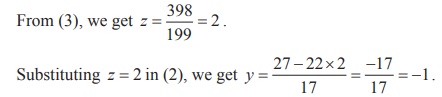

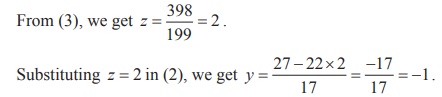

Solution

Transforming

the augmented matrix to echelon form, we get

The

equivalent system is written by using the echelon form:

x

+ 5y + 7z = 13 , … (1)

17y

+ 22z = 27 , … (2)

199z

= 398 . … (3)

Substituting

z = 2, y = -1 in (1), we get x = 13 - 5 ×

(−1 ) − 7 × 2 = 4 .

So,

the solution is ( x =4, y = - 1, z = 2 ).

Note. The above method of going

from the last equation to the first equation is called the method of

back substitution.

Example 1.28

The upward speed v(t)

of a rocket at time t is approximated by v(t) = at2 + bt + c, 0 ≤ t ≤ 100 where a, b, and c are

constants. It has been found that the speed at times t = 3, t = 6 , and t = 9 seconds are respectively, 64, 133, and 208

miles per second respectively. Find the speed at time t

= 15 seconds. (Use Gaussian elimination method.)

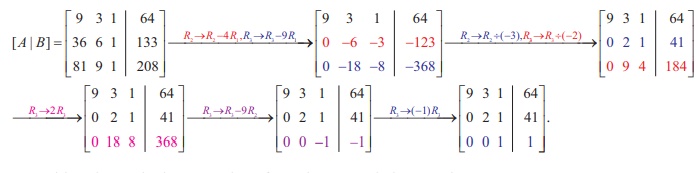

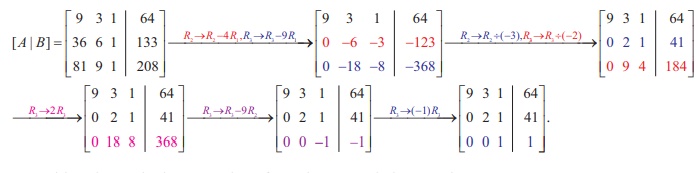

Solution

Since v(3) =64, v(6) = 133 and v(9)

= 208 , we get the following system of linear equations

9a

+3b + c = 64 ,

36a

+ 6b + c = 133,

81a

+ 9b + c = 208 .

We

solve the above system of linear equations by Gaussian elimination method.

Reducing

the augmented matrix to an equivalent row-echelon form by using elementary row operations, we get

Writing

the equivalent equations from the row-echelon matrix, we get

9a

+ 3b + c = 64, 2b + c = 41, c= 1.

By

back substitution, we get

So,

we get v (t) = 1/3 t2 +

20t + 1.

Hence, v(15) = 1/3 (225) + 20(15) + 1 = 75 + 300 + 1 = 376

Solution To A System Of Linear

Equations

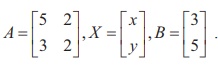

Example 1.22

Solve

the following system of linear equations, using matrix inversion method:

5x + 2 y = 3,

3x + 2 y = 5

.

Solution

The

matrix form of the system is AX = B , where

We

find |A| =  = 10 - 6= 4 ≠ 0. So, A−1 exists

and A−1 =

= 10 - 6= 4 ≠ 0. So, A−1 exists

and A−1 =

Then,

applying the formula X = A−1B , we get

So

the solution is (x = −1, y = 4).

Example 1.23

Solve

the following system of equations, using matrix inversion method:

2x1 +

3x2 + 3x3 = 5,

x1 –

2x2 + x3 = -4,

3x1 –

x2 – 2x3 = 3

Solution

The

matrix form of the system is AX = B,where

So,

the solution is ( x1 = 1, x2 =

2, x3 = −1) .

Example 1.24

If  , find the products AB and BA and hence solve the system of

equations x − y + z = 4, x – 2y – 2z = 9, 2x + y +3z =1.

, find the products AB and BA and hence solve the system of

equations x − y + z = 4, x – 2y – 2z = 9, 2x + y +3z =1.

Solution

Writing

the given system of equations in matrix form, we get

Hence,

the solution is (x = 3, y = - 2, z =

−1).

(Ii) Cramer’s Rule

Example 1.25

Solve,

by Cramer’s rule, the system of equations

x1 − x2 = 3,

2x1 + 3x2 + 4x3 = 17, x2 + 2x3 = 7.

Solution

First

we evaluate the determinants

So,

the solution is (x1 = 2, x2 =

- 1, x3 = 4).

Example 1.26

In a T20 match, Chennai

Super Kings needed just 6 runs to win with 1 ball left to go in the last over.

The last ball was bowled and the batsman at the crease hit it high up. The ball

traversed along a path in a vertical plane and the equation of the path

is y = ax2 + bx + c with

respect to a xy -coordinate

system in the vertical plane and the ball

traversed through the points

(10,8), (20,16), (30,18) , can you conclude that Chennai Super Kings won

the match

Justify

your answer. (All distances are measured in metres

and the meeting point of the plane of the path with the farthest boundary

line is (70, 0).)

Solution

The path y = ax2 + bx + c passes

through the points (10,8), (20,16), (40, 22) . So, we get the system of equations 100a + 10b + c = 8, 400a + 20b + c= 16,1600a + 40b + c = 22. To apply Cramer’s rule, we find

When x =

70, we get y = 6.

So, the ball went by 6 metres high over the boundary line and it is impossible for

a fielder standing even just before theboundary

line to jump and catch the ball.

Hence the ball went for a

super six and the Chennai Super Kings won the match.

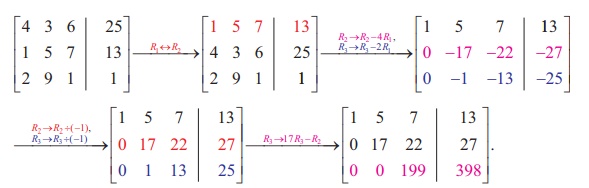

(iii) Gaussian Elimination Method

Example 1.27

Solve

the following system of linear equations, by Gaussian elimination method :

4x + 3y + 6z = 25, x + 5 y + 7z = 13,

2x + 9 y + z = 1.

Solution

Transforming

the augmented matrix to echelon form, we get

The

equivalent system is written by using the echelon form:

x

+ 5y + 7z = 13 , … (1)

17y

+ 22z = 27 , … (2)

199z

= 398 . … (3)

Substituting

z = 2, y = -1 in (1), we get x = 13 - 5 ×

(−1 ) − 7 × 2 = 4 .

So,

the solution is ( x =4, y = - 1, z = 2 ).

Note. The above method of going

from the last equation to the first equation is called the method of

back substitution.

Example 1.28

The upward speed v(t)

of a rocket at time t is approximated by v(t) = at2 + bt + c, 0 ≤ t ≤ 100 where a, b, and c are

constants. It has been found that the speed at times t = 3, t = 6 , and t = 9 seconds are respectively, 64, 133, and 208

miles per second respectively. Find the speed at time t

= 15 seconds. (Use Gaussian elimination method.)

Solution

Since v(3) =64, v(6) = 133 and v(9)

= 208 , we get the following system of linear equations

9a

+3b + c = 64 ,

36a

+ 6b + c = 133,

81a

+ 9b + c = 208 .

We

solve the above system of linear equations by Gaussian elimination method.

Reducing

the augmented matrix to an equivalent row-echelon form by using elementary row operations, we get

Writing

the equivalent equations from the row-echelon matrix, we get

9a

+ 3b + c = 64, 2b + c = 41, c= 1.

By

back substitution, we get

So,

we get v (t) = 1/3 t2 + 20t + 1.

Hence, v(15) = 1/3 (225) + 20(15) + 1 = 75 + 300 + 1 = 376

Applications

Of Matrices: Consistency Of System Of Linear Equations

By Rank Method

In second previous section, we have already defined consistency of

a system of linear equation. In this section,

we investigate it by using rank method. We state the following theorem without

proof:

Theorem 1.14 (Rouché - Capelli

Theorem)

A

system of linear equations, written in the matrix form as AX = B,

is consistent if and only if the rank of the coefficient matrix is equal to the

rank of the augmented matrix; that is, ρ ( A) = ρ ([ A | B]).

We

apply the theorem in the following examples.

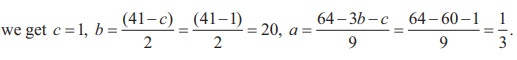

Non-homogeneous Linear Equations

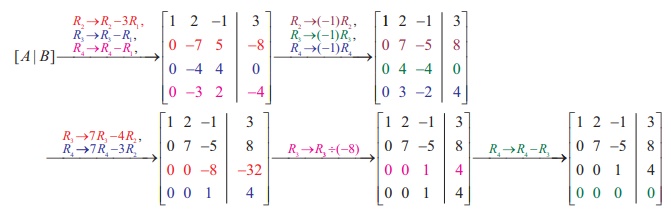

Example 1.29

Test

for consistency of the following system of linear equations and if possible

solve:

x + 2 y − z =

3, 3x − y + 2z = 1, x −

2 y + 3z = 3, x − y + z +1

= 0 .

Solution

Here

the number of unknowns is 3.

The

matrix form of the system is AX = B, where

Applying

Gaussian elimination method on [ A | B], we get

There

are three non-zero rows in the row-echelon form of [A | B].So, ρ ([A | B] ) . = 3

So,

the row-echelon form of A is  . There are three non-zero rows in it. So ρ(A)

= 3.

. There are three non-zero rows in it. So ρ(A)

= 3.

Hence,

ρ(A) = ρ([ A | B ]) = 3.

From

the echelon form, we write the equivalent system of equations

x

+ 2y –z =3, 7y-5z = 8, z=4, 0=0.

The

last equation 0 = 0 is meaningful. By the method of back substitution, we get

z

= 4

7y

- 20 = 8 ⇒ y = 4 ,

x

= 3 - 8 + 4 ⇒ x = −1.

So,

the solution is (x = −1, y = 4, z = 4)

.(Note that A is not a square matrix.)

Here

the given system is consistent and the solution is unique.

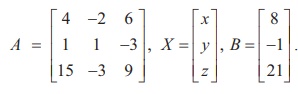

Example 1.30

Test

for consistency of the following system of linear equations and if possible

solve:

4x −

2 y + 6z = 8, x + y −

3z = −1, 15x − 3y + 9z =

21.

Solution

Here the number of unknowns is 3.

The

matrix form of the system is AX = B, where

Applying

elementary row operations on the augmented matrix[ A | B],

we get

So, ρ ( A)

= ρ ([ A | B]) = 2 < 3.

From the echelon form, we get the equivalent equations

x + y - 3z =

-1, y - 3z = -2 , 0 = 0 .

The equivalent system has two non-trivial equations and three unknowns. So, one of the unknowns should be fixed at our

choice in order to get two equations for the other two unknowns. We fix z arbitrarily

as a real number t , and

we get y = 3t -

2, x = -1- (3t - 2) + 3t = 1. So, the solution is ( x =1, y = 3t - 2, z = t ), where t is real . The above solution set is a one-parameter family of solutions.

Here, the given system is consistent and has infinitely many solutions which form a one parameter

family of solutions.

Note

In the above example, the square matrix A is singular and so matrix inversion method cannot be applied to solve the system of equations. However,Gaussian elimination method is applicable and we

are able to decide whether the system is consistent or not. The next example

also confirms the supremacy of Gaussian elimination method over other methods.

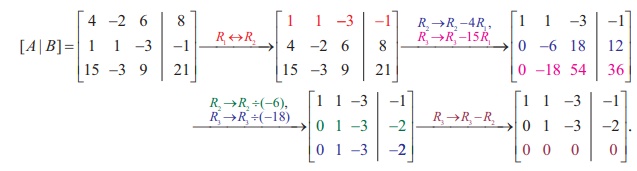

Example 1.31

Test

for consistency of the following system of linear equations and if possible

solve:

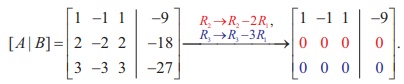

x − y + z = −9, 2x −

2 y + 2z = −18, 3x − 3y +

3z + 27 = 0.

Solution

Here the number of unknowns is 3.

The

matrix form of the system is AX = B, where

Applying

elementary row operations on the augmented matrix[ A | B],

we get

So, ρ ( A) = ρ ([ A | B])

= 1 < 3.

From the echelon form, we get the equivalent equations x - y + z = -9, 0 = 0, 0 = 0.

The equivalent system has one non-trivial equation and three unknowns.

Taking y = s, z = t arbitrarily, we get x - s + t = -9; or x = -9 + s - t.

So,

the solution is ( x =

-9 + s - t, y = s, z = t ), where s and t are

parameters.

The above solution set is a two-parameter family of solutions.

Here,

the given system of equations is consistent and has infinitely many solutions

which form a two parameter family of solutions.

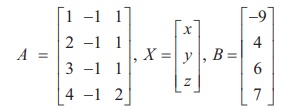

Example 1.32

Test

the consistency of the following system of linear equations

x − y + z = −9, 2x − y + z =

4, 3x − y + z = 6, 4x − y +

2z = 7.

Solution

Here the number of unknowns is 3.

The matrix form of the system of equations is AX = B,

where

Applying elementary row operations on the augmented matrix

[A|B], we get

So, ρ (A) = 3 and ρ ([ A |

B]) = 4. Hence ρ ( A) ≠ ρ ([ A | B]).

If we write the equivalent system of equations using the echelon

form, we get

x - y + z = -9, y - z = 22, z = -23, 0 = -11.

The last equation is a contradiction.

So the given system of equations is inconsistent and has no solution.

By Rouché - Capelli theorem, we have the following rule:

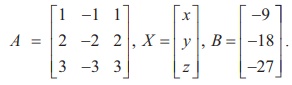

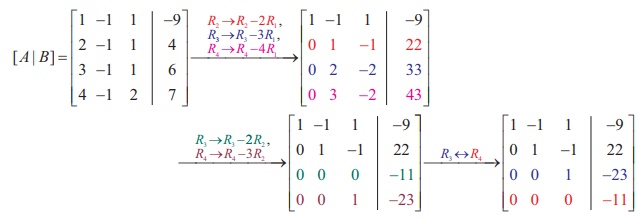

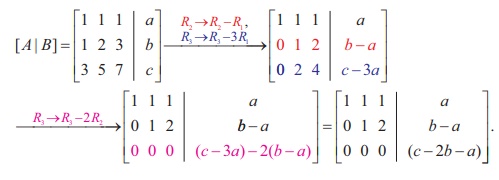

Example 1.33

Find

the condition on a, b and c so that the following system of linear equations

has one parameter family of solutions: x + y + z = a, x + 2 y + 3z = b, 3x + 5

y + 7z = c.

Solution

Here

the number of unknowns is 3.

The

matrix form of the system is AX = B, where A =

Applying

elementary row operations on the augmented matrix [ A | B],

we get

In order that the system should have one parameter family of

solutions, we must have ρ ( A)

= ρ ([ A, B]) = 2. So, the third

row in the echelon form should be a zero row.

So, c -

2b - a = 0 ⇒ c = a +

2b.

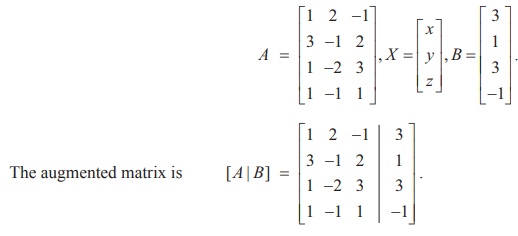

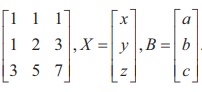

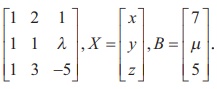

Example 1.34

Investigate

for what values of λ and μ the

system of linear equations

x + 2 y + z =

7, x + y + λ z = μ, x +

3y − 5z = 5 has

(i) no solution (ii) a unique

solution (iii) an infinite number of solutions.

Solution

Here the number of unknowns is 3.

The

matrix form of the system is AX = B, where A =

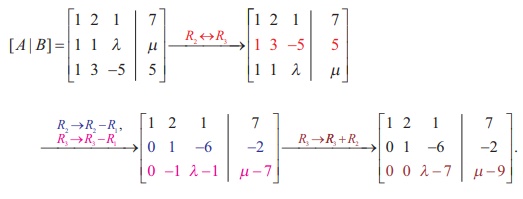

Applying

elementary row operations on the augmented matrix [ A | B],

we get

(i) If λ = 7 and μ

≠ 9 , then ρ (A) = 2 and ρ ([ A | B]) = 3. So ρ ( A) ≠ ρ ([ A | B]). Hence the given system is

inconsistent and has no solution.

(ii)

If λ ≠ 7 and m is any real number,

then ρ (A) = 3 and ρ ([ A | B]) = 3.

So

ρ (A) = ρ ([ A | B]) = 3 = Number of

unknowns. Hence the given system is consistent and has a unique solution.

(iii)

If λ = 7 and μ = 9, then ρ(A) = 2

and ρ ([ A | B]) = 2.

So,

ρ(A) = ρ ([ A | B]) = 2 < Number of

unknowns. Hence the given system is consistent and has infinite number of solutions.